The 1970s were not a good time for rail commuters in Boston. New roadways had opened and several rail lines had shut, and those that remained had anemic schedules. In the 1950s, Commuter Rail was provided by private carriers (the Boston and Maine north of the city; the Boston and Albany, owned by the New York Central, on the Worcester Line; and the New Haven elsewhere from South Station), much as it is today on major lines, with less service on some branch lines (nearly all of which have since been abandoned). Many of these timetables from 1952 can be found here. In the late 1940s, South Station handled 125,000 passengers, far more than today. Only Chicago, Philadelphia and New York had similar or larger systems.

Significant cuts came in the 1950s, including the demise of the Old Colony Lines when the Southeast Expressway opened. After beginning to subsidize commuter railroads in the 1960s, the T was in the midst of a years-long experiment to figure out how best to fund Commuter Rail, and service was often cut in towns that refused to pay up, leading to closed-door service in which trains would bypass stations in a non-paying municipality. (This coincided with upheaval in the rail industry in general, as Penn Central and Boston and Maine both teetered on the brink of insolvency, while still operating the T’s Commuter Rail system.) Termini were cut back, and on the north side the outer sections of rail lines often had only a single trip in the morning and evening (or as the T would say today: “twice a day”).

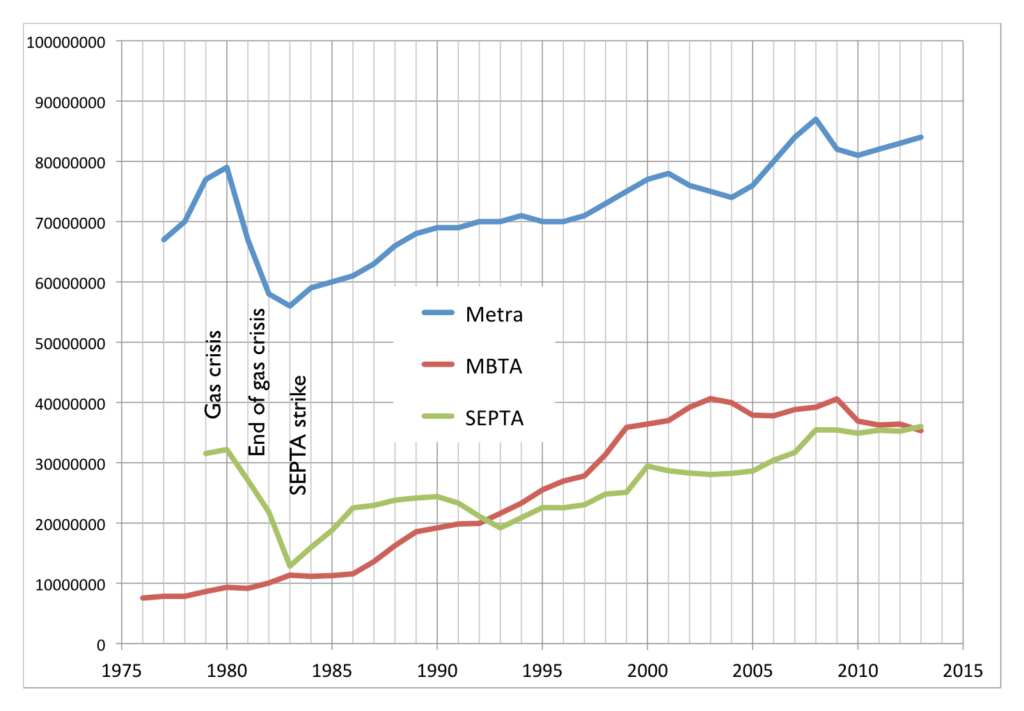

Rail lines, owned by bankrupt freight railroads (even the mostly-passenger New Haven had been merged into the ill-fated Penn Central), fell into disrepair. Service to South Sudbury was cut in 1971 (49 minutes Sudbury to North Station; try that today), Worcester was dropped in 1975, Bedford (35 minutes to Boston) was mothballed in 1977 and Woburn in 1981. What service remained was often run on a skeleton schedule with only a handful of inbound runs in the morning and outbound runs in the evening. Ridership and service were better compared to the ill-fated lines in Pittsburgh, Cleveland and Detroit. No longer was the MBTA in the same league as Metra, SEPTA or the lines serving New York. It was a hair away from disappearing altogether.

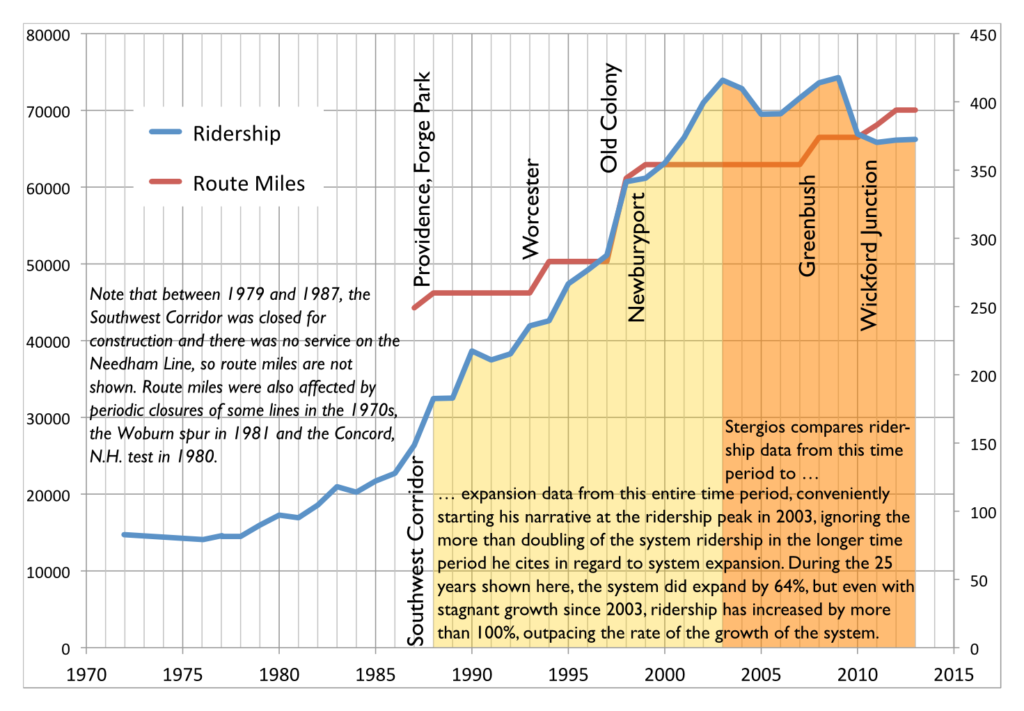

In the 1980s, however, something changed. The state bought new equipment, rebuilt track and increased the number of trips. By the 1990s, the Southwest Corridor was complete, the Old Colony Lines rebuilt (or, in the case of Kingston, overbuilt), and service reinstated to Worcester, Providence and Newburyport. Lines which saw one train a day in the 1970s (or, at times, zero) had 20, and most weekend service had been reinstated. Ridership responded: while 15,000 passengers boarded trains daily in 1972 (and most of the rest of the decade), by 2000, 60,000 passengers rode the rails each day.

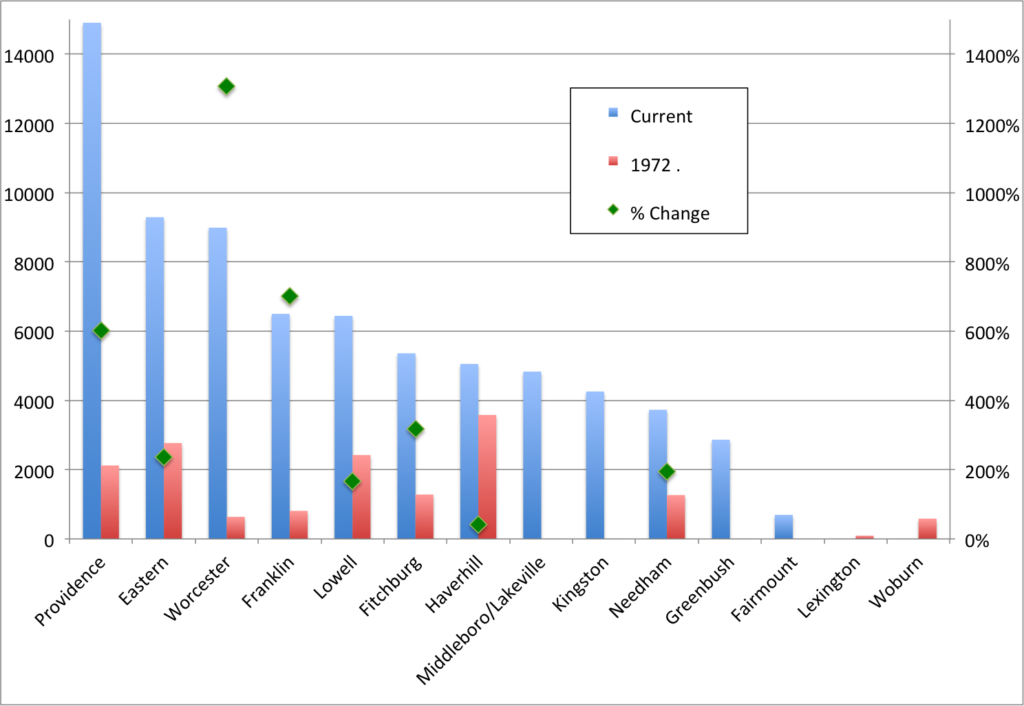

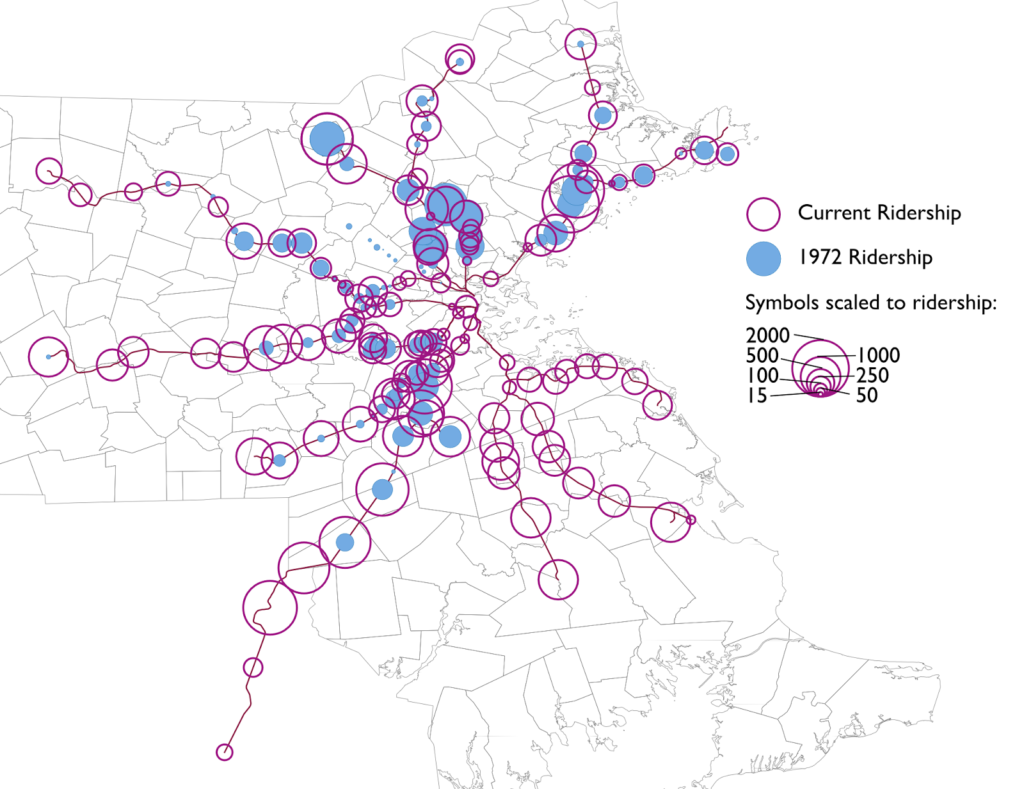

The patterns of this change are interesting. (Current numbers use the median ridership for the past 10 years, based on MBTA Blue Book data, to account for variability in single-year passenger counts.) Today, about 46,000 passengers use lines radiating from South Station while 26,000 passengers use those from North Station. In 1972, the numbers were much lower, and the ratios reversed: 11,000 passengers used North Station, and fewer than 5,000 used South Station lines. So while North Station ridership has grown significantly in the past 40 years, by roughly 150%, South Station ridership has grown to nearly ten times its 1972 level. It’s hard to imagine how sleepy South Station must have been in the 1970s, as compared to the constant streams of commuters crowding the concourse today.
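
The growth comparison above is simple arithmetic, and a short Python sketch makes the difference between a growth multiple and a percentage increase explicit. It uses only the rounded figures quoted in the text, so its output will not exactly match percentages computed from the underlying Blue Book counts. The `growth` helper and the `ridership` dictionary are illustrative names, not part of any MBTA data set. Because the 1972 South Station figure is given only as "fewer than 5,000," the 5,000 used here is an upper bound, which makes the computed South Station growth a lower bound.

```python
def growth(before: float, after: float) -> tuple[float, float]:
    """Return (multiple, percent_increase) going from `before` to `after`."""
    multiple = after / before
    return multiple, (multiple - 1) * 100


# Rounded daily ridership quoted in the text: (1972, recent 10-year median).
# ASSUMPTION: South Station's 1972 value is stated as "fewer than 5,000",
# so 5,000 is an upper bound and the resulting growth is a lower bound.
ridership = {
    "North Station": (11_000, 26_000),
    "South Station": (5_000, 46_000),
}

for terminal, (riders_1972, riders_today) in ridership.items():
    multiple, pct = growth(riders_1972, riders_today)
    print(f"{terminal}: {multiple:.1f}x the 1972 level, a {pct:.0f}% increase")
```

Keeping the two measures separate matters when reading the headline numbers: a tenfold rise is a 900% increase, because the multiple includes the starting level.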

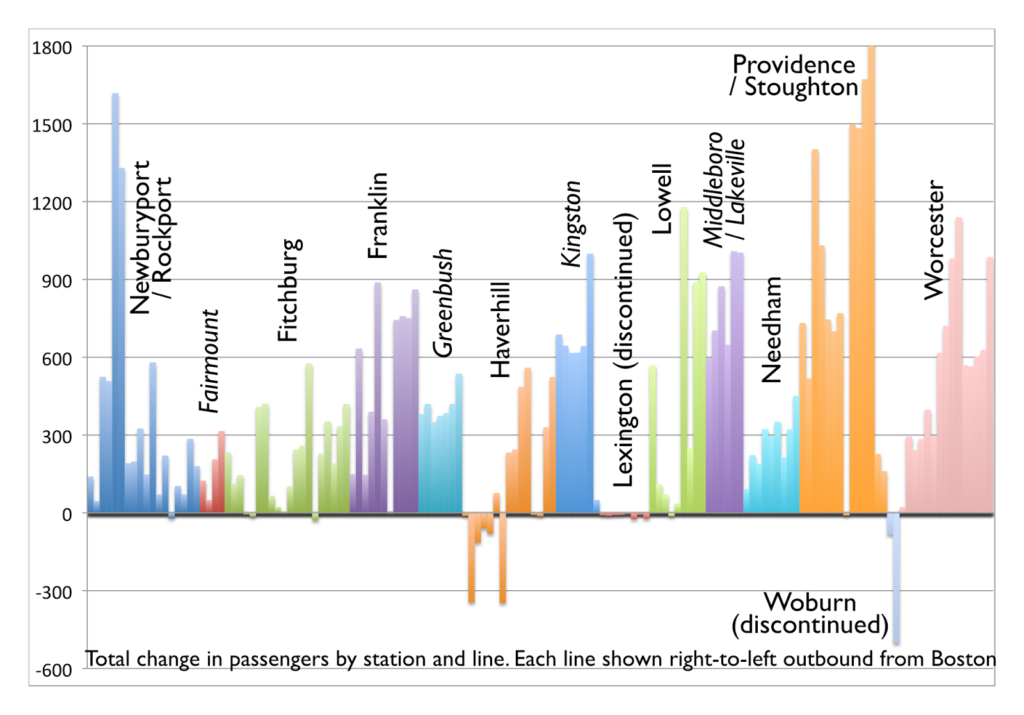

Line-by-line, even station-by-station, there are dramatic differences in the changes over the years.

Of the top five lines in 1972, four were on the North Side: Haverhill, Eastern Route (Newburyport/Rockport), Lowell, Attleboro and Fitchburg. Today, three of the top five lines are on the South Side: Providence, Eastern, Worcester, Franklin and Lowell. In 1972, the Worcester Line bested only the two-station Woburn spur and the Lexington/Bedford line, both of which were discontinued in the ensuing decade.

In 1972, the Reading Line (the single Haverhill train operated via the Wildcat Line) accounted for more than 20% of Commuter Rail’s total ridership. I-93 hadn’t yet fully opened to Boston, and the Orange Line ended in Everett rather than Oak Grove. While overall Haverhill ridership has increased, all of the gains have come from the outer part of the line; there are fewer passengers at nearly every station from Reading inbound. The Worcester Line, on the other hand, carried just 600 passengers on three rush hour trains. Today it has that many trains per hour at rush hour, each of which carries 600 passengers (or more).

In absolute numbers, the biggest gains have been along the Providence Line and at Salem and Beverly, where several stations have seen gains of more than 1000 riders per day (some of which, like Providence and South Attleboro, had a baseline of zero). Many stations across the system have gained 500 riders a day or more. The inner Haverhill Line and nearby stations on the Woburn Branch stand out as the only stations to lose significant ridership; most other stations showing ridership declines are small stations which were closed (the largest, West Acton, now has a shuttle bus to South Acton, where parking is full before 7 a.m.).

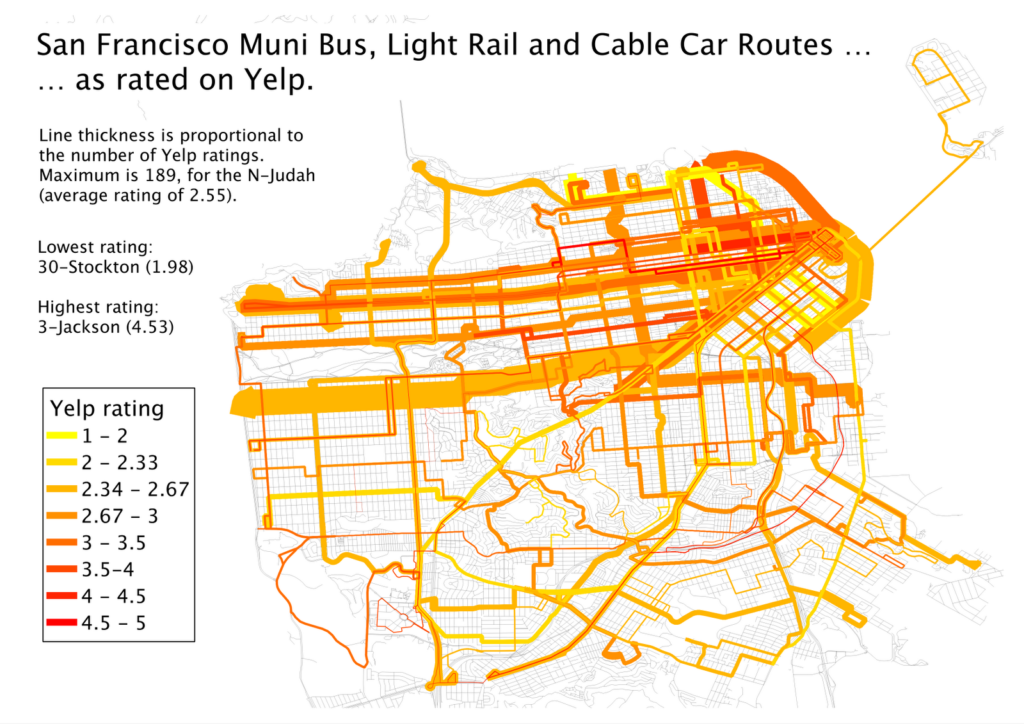

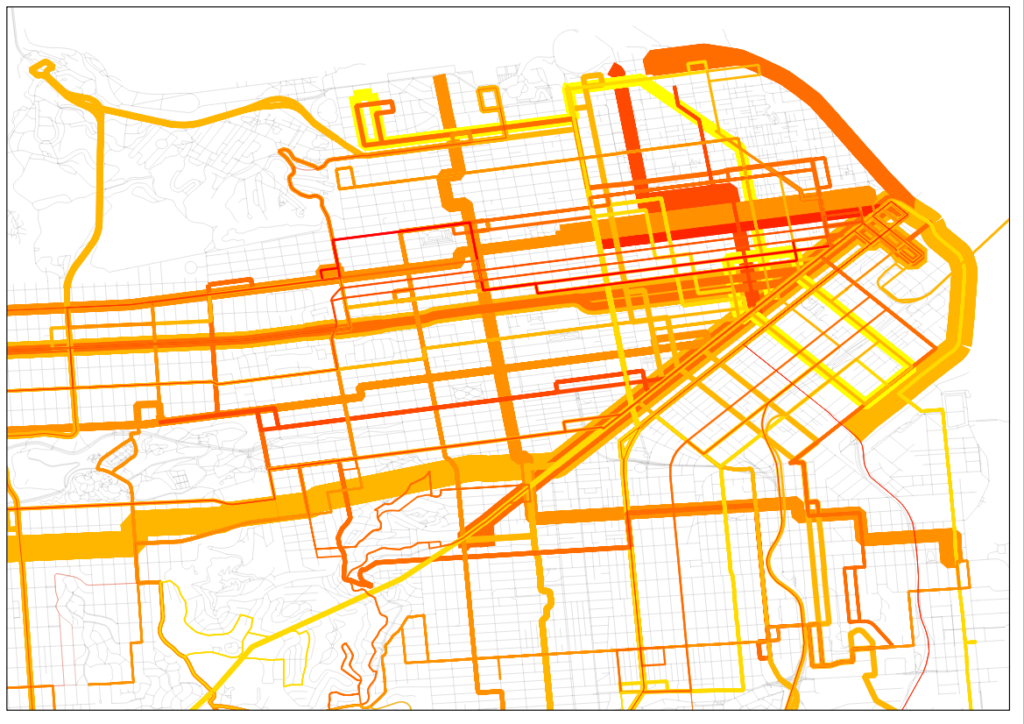

These data can also be mapped, of course. The map shows the disparate growth on different lines, and how minimal ridership was in 1972, especially south and west of the city, compared with today.

Note that 1972 ridership is shown in B&M blue, since the B&M operated the majority of the system then.

Boston came very close to losing its commuter rail system in its entirety, something which occurred in no other city (the closest was the abandonment of the non-electrified portions of the SEPTA system in the early 1980s; while Boston’s ridership began to rise in the 1980s, SEPTA and Metra saw ridership decline in the early ’80s). Had the highway moratorium not come into place in 1972 and the Southwest Corridor been built as a highway, it may have meant the end of commuter service south of the city. Worcester ridership was minimal, and the T threatened to curtail north side service entirely (the majority of the system at that point) if it couldn’t buy the assets of the Boston and Maine.

Improvements and additions to trackage and rolling stock from the 1970s to 1990s fueled dramatic growth in the system, although it has leveled off in the past decade, owing to a combination of higher fares and an aging physical plant. While the system is no longer on the brink of insolvency (even if it were, adding 60,000 cars to Boston’s already strained road system would be a non-starter), it needs a new round of investment as the city, and especially the downtown core, continues to grow.